Publications

* equal contribution

2026

- ICLR 2026 (poster)

Relational Feature Caching for Accelerating Diffusion Transformers. Byunggwan Son*, Jeimin Jeon*, Jeongwoo Choi*, and Bumsub Ham. In International Conference on Learning Representations (ICLR), 2026.

Feature caching approaches accelerate diffusion transformers (DiTs) by storing the output features of computationally expensive modules at certain timesteps and reusing them in subsequent steps to reduce redundant computation. Recent forecasting-based caching approaches employ temporal extrapolation techniques to approximate the output features from cached ones. Although effective, relying exclusively on temporal extrapolation still suffers from significant prediction errors, leading to performance degradation. Through a detailed analysis, we find that 1) these errors stem from the irregular magnitude of changes in the output features, and 2) the input feature of a module is strongly correlated with the corresponding output. Based on this, we propose relational feature caching (RFC), a novel framework that leverages the input-output relationship to enhance the accuracy of feature prediction. Specifically, we introduce relational feature estimation (RFE) to estimate the magnitude of changes in the output features from the inputs, enabling more accurate feature predictions. We also present relational cache scheduling (RCS), which estimates the prediction errors using the input features and performs full computations only when the errors are expected to be substantial. Extensive experiments across various DiT models demonstrate that RFC consistently and significantly outperforms prior approaches. We will release our code publicly upon acceptance.

@inproceedings{son2026relational, title = {Relational Feature Caching for Accelerating Diffusion Transformers}, author = {Son, Byunggwan and Jeon, Jeimin and Choi, Jeongwoo and Ham, Bumsub}, booktitle = {International Conference on Learning Representations (ICLR)}, year = {2026}, }

2025

- NeurIPS 2025 (poster)

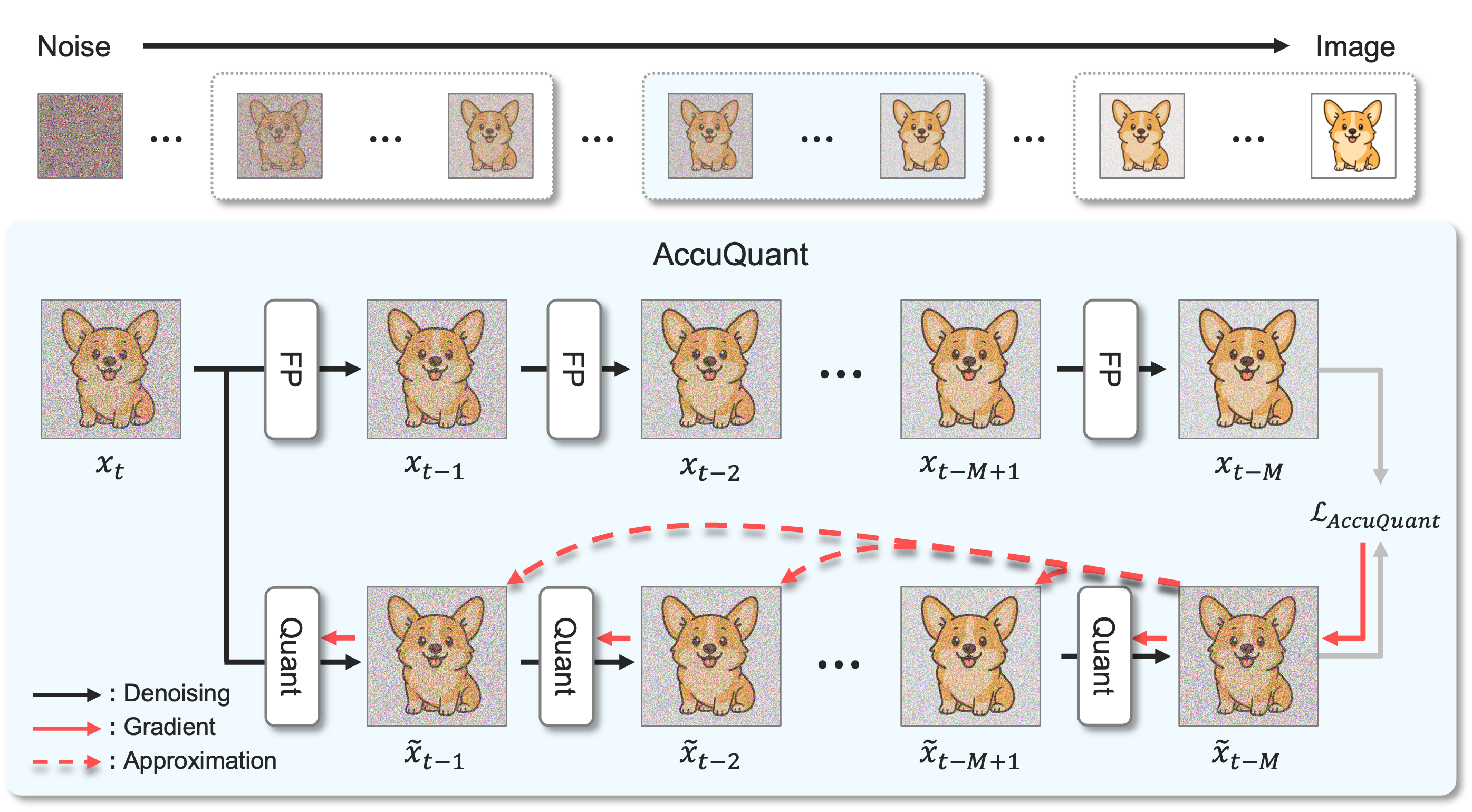

AccuQuant: Simulating Multiple Denoising Steps for Quantizing Diffusion Models. Seunghoon Lee*, Jeongwoo Choi*, Byunggwan Son, Jaehyeon Moon, Jeimin Jeon, and Bumsub Ham. In Conference on Neural Information Processing Systems (NeurIPS), 2025.

We present a novel post-training quantization (PTQ) method, dubbed AccuQuant, for diffusion models. We show analytically and empirically that quantization errors for diffusion models accumulate over the denoising steps of a sampling process. To alleviate this error accumulation problem, AccuQuant minimizes the discrepancies between the outputs of a full-precision diffusion model and its quantized version within a couple of denoising steps. That is, it explicitly simulates multiple denoising steps of a diffusion sampling process for quantization, accounting for the errors accumulated over those steps. This is in contrast to previous approaches, which imitate the training process of diffusion models, namely, minimizing the discrepancies independently for each step. We also present an efficient implementation technique for AccuQuant, together with a novel objective, which reduces the memory complexity significantly from O(n) to O(1), where n is the number of denoising steps. We demonstrate the efficacy and efficiency of AccuQuant across various tasks and diffusion models on standard benchmarks.

@inproceedings{lee2025accuquant, title = {AccuQuant: Simulating Multiple Denoising Steps for Quantizing Diffusion Models}, author = {Lee, Seunghoon and Choi, Jeongwoo and Son, Byunggwan and Moon, Jaehyeon and Jeon, Jeimin and Ham, Bumsub}, booktitle = {Conference on Neural Information Processing Systems (NeurIPS)}, year = {2025}, }